Resource library

Get inspired and elevate your teaching with Kognity’s latest professional development guides, white papers, blog articles and webinars.


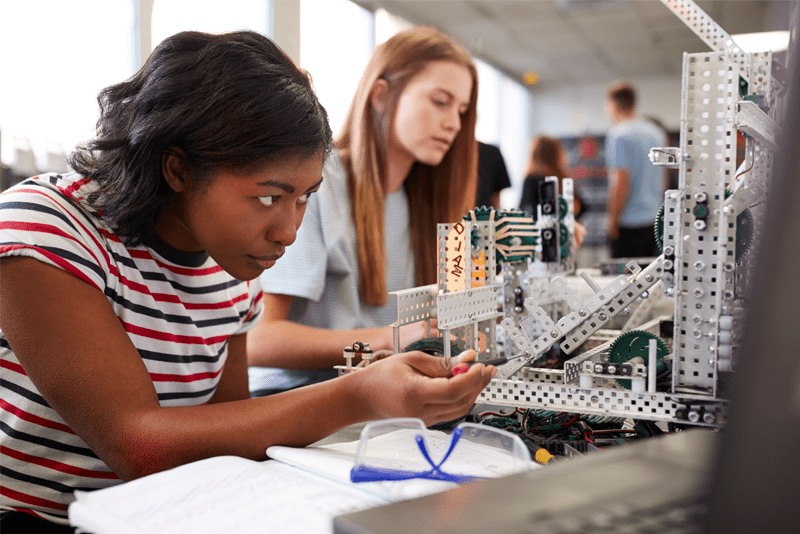

The Power of Inquiry-Based Learning

Discover a transformative approach to teaching and learning. The Next Generation Science Standards (NGSS) introduced a paradigm shift in high school science education. Instead of isolated content areas, NGSS emphasizes Inquiry-Based Learning (IBL)—a dynamic method that sparks curiosity and critical thinking.

Embracing Neurodiversity: 7 Tips for Fostering Inclusive Learning Environments

Science thrives on neurodiversity, the beautiful spectrum of how brains learn and process information. But many classrooms unintentionally create barriers for neurodivergent learners, stifling their potential and dimming the classroom’s brilliance.

Ed Tech in the Neurodiverse Classroom


Microsystems of Assessment and You: Getting the Most out of Your Assessment Data


Chemistry for All: Inclusion Stories and Teaching Strategies

Kognity’s learning principles

Working alongside learning design experts, we designed our product around four principles: learning should be active, inclusive, applied, and formative.

The Teacher's Guide to Choice Boards: Empower & Engage Students!


Bring STEM to Class
